Introduction

The EU Artificial Intelligence (AI) Act is an EU regulation which entered into force on 2 August 2024 and is directly applicable across the EU. The regulation applies in a phased manner over 36 months from entry into force.

The regulation is designed to provide a high level of protection to people’s health, safety and fundamental rights, and to promote the adoption of human-centric, trustworthy AI. It will provide a harmonised regulatory framework for AI systems placed on the market, or deployed, in the EU.

The Act applies equally to uses of AI in the public service and in the private sector. However, it provides exemptions for certain applications of AI relating to national defence; national security; scientific R&D; R&D for AI systems and models; open-sourced models; and personal use.

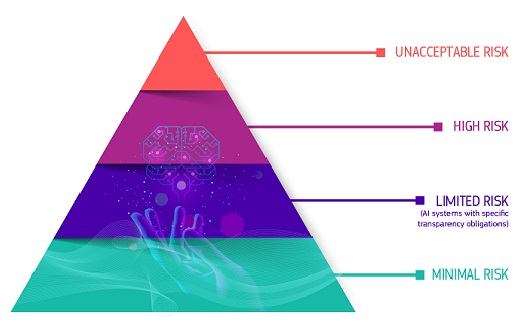

The Act is not a blanket regulation applying to all AI systems. Rather, it adopts a risk-based approach to regulation, based on four risk categories in order to ensure that its measures are targeted and proportionate. The Act also makes allowance for the unique circumstances of SMEs. The four risk categories are described in the next section.

Risk hierarchy underpinning the EU AI Act

Source: European Commission

Unacceptable risk

These are eight harmful uses of AI that contravene EU values because they violate fundamental rights. The prohibited uses are:

- subliminal techniques likely to cause significant harm to the person targeted or to another person

- exploiting vulnerabilities due to age, disability or social or economic situation

- social scoring leading to disproportionate detrimental or unfavourable treatment

- profiling individuals for prediction of criminal activity

- untargeted scraping of facial images

- inferring emotions in work or education

- biometric categorisation of race, religion, sexual orientation

- real-time remote biometric identification for law enforcement purposes

The prohibitions on uses with unacceptable risk apply from February 2025. Reference guidelines for the application of these provisions of the AI Act have been published by the European Commission:

Guidelines on prohibited AI practices as defined by the AI Act

High risk

High-risk AI systems fall into two categories. The first relates to AI systems that are safety components of products, or are themselves products, falling within the scope of certain Union harmonisation legislation listed in Annex I of the Act, for example legislation on toys and machinery.

The provisions on product-linked high-risk AI systems apply from August 2027.

The second category relates to eight specific high-risk uses of AI listed in Annex III of the Act. These are AI systems that could have an adverse impact on people's safety or on their fundamental rights, as protected by the EU Charter of Fundamental Rights.

The provisions on high-risk uses apply from August 2026. You can view the classification rules for high-risk AI systems on the AI Act Explorer.

Specific transparency risk

The AI Act introduces specific transparency requirements for certain AI applications where there is a clear risk of manipulation, for example the use of chatbots or deepfakes. These obligations are set out in Article 50: Transparency obligations for providers and deployers of certain AI systems.

Minimal risk

Most AI systems will only give rise to minimal risks and consequently, can be marketed and used subject to the existing legislation without additional legal obligations under the AI Act.

General-purpose AI models

General-purpose AI (GPAI) models, often referred to as foundation models, exhibit significant generality and are capable of competently performing a wide range of distinct tasks. As a consequence of this power, they pose unique risks, including systemic risks to the EU. For this reason, there are specific provisions and obligations applying to GPAI models under the AI Act. The European Commission, through its AI Office, is responsible for enforcing these provisions.

Further information on GPAI models can be found on the AI Act Single Information Platform.

Rules for AI systems

The AI Act introduces rules for certain AI systems placed on the market, or deployed, in the EU to mitigate the risks to people’s health, safety and fundamental rights. These rules relate to certification of AI systems’ conformance with standards, governance of AI systems during development, and supervision of systems when in use.

The key responsibilities under the Act lie with providers and deployers of AI systems. However, there are also responsibilities for other actors in the supply chain: importers, distributors, and authorised representatives.

Application of the Act

The provisions of the EU AI Act apply in a phased manner from its entry into force in August 2024.

Key milestones include:

- 2 November 2024: Member states must identify public bodies which supervise or enforce fundamental rights in relation to the use of high-risk AI systems referred to in Annex III and make the list publicly available. Related press release: Key milestone in the implementation of the EU regulation on AI

- 2 February 2025: Rules on prohibited AI practices come into effect. Providers and deployers must ensure their staff have a sufficient level of AI literacy.

- 2 August 2025: Member states must designate competent authorities for the purposes of the Act. Member states must legislate for penalties for infringements of the Act.

- 2 August 2026: Member states must have an operational AI Regulatory Sandbox to support innovation. Rules on high-risk AI systems (use cases - Annex III) come into effect.

- 2 August 2027: Rules on high-risk AI systems (products/Annex I) come into effect.
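The phased application can be illustrated as a simple date lookup. The following is a minimal Python sketch, not an official tool. It uses only application dates mentioned on this page for three rule sets; where the page gives only a month, the 2nd of the month is assumed, matching the 2 August 2024 entry into force. The names `MILESTONES` and `rules_in_effect` are hypothetical and chosen for illustration.

```python
from datetime import date

# Application dates for three rule sets mentioned on this page.
# Assumption: where only a month is given, the 2nd of that month is used,
# in line with the 2 August 2024 entry into force.
MILESTONES = {
    "prohibited AI practices": date(2025, 2, 2),
    "high-risk AI systems (Annex III use cases)": date(2026, 8, 2),
    "high-risk AI systems (Annex I products)": date(2027, 8, 2),
}


def rules_in_effect(on: date) -> list[str]:
    """Return the rule sets whose application date has been reached on the given day."""
    return [name for name, start in MILESTONES.items() if on >= start]


if __name__ == "__main__":
    for name in rules_in_effect(date(2026, 8, 2)):
        print(name)
```

The sketch covers only these three rule sets and does not reflect any later amendment to the dates; the Act itself remains the authoritative source.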

Penalties

For infringements of the rules for AI systems, the EU AI Act sets out the maximum applicable penalties as below:

- up to €35 million or 7% of the total worldwide annual turnover of the preceding financial year (whichever is higher) for infringements on prohibited practices or non-compliance related to requirements on data

- up to €15 million or 3% of the total worldwide annual turnover of the preceding financial year for non-compliance with any of the other requirements or obligations of the Act

- up to €7.5 million or 1.5% of the total worldwide annual turnover of the preceding financial year for the supply of incorrect, incomplete or misleading information to notified bodies and national competent authorities in reply to a request from a competent authority

For each category of infringement, the threshold is the lower of the two amounts for SMEs, and the higher of the two for other companies.
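The maximum amounts above can be worked through as simple arithmetic. The following is a minimal Python sketch under stated assumptions, not a legal calculation: the function name `max_penalty_eur`, the tier keys and the `is_sme` flag are hypothetical names chosen for illustration, and percentages are expressed in basis points (700 = 7%) so that whole-euro integer arithmetic can be used.

```python
# Maximum penalty tiers as listed above: (fixed amount in euro, share of turnover in basis points).
# The dictionary keys are illustrative labels, not terms from the Act.
PENALTY_TIERS = {
    "prohibited_practices": (35_000_000, 700),   # EUR 35 million or 7%
    "other_obligations": (15_000_000, 300),      # EUR 15 million or 3%
    "incorrect_information": (7_500_000, 150),   # EUR 7.5 million or 1.5%
}


def max_penalty_eur(tier: str, annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the maximum applicable penalty, in whole euro, for a tier.

    The turnover-based amount is a share of total worldwide annual turnover
    for the preceding financial year. SMEs face the lower of the two amounts,
    other companies the higher.
    """
    fixed_amount, share_bps = PENALTY_TIERS[tier]
    turnover_based = annual_turnover_eur * share_bps // 10_000
    if is_sme:
        return min(fixed_amount, turnover_based)
    return max(fixed_amount, turnover_based)


if __name__ == "__main__":
    print(max_penalty_eur("prohibited_practices", 1_000_000_000))
    print(max_penalty_eur("prohibited_practices", 10_000_000, is_sme=True))
```

For a company that is not an SME with a worldwide annual turnover of €1 billion, the turnover-based amount for a prohibited practice (7%, or €70 million) exceeds the €35 million fixed amount, so €70 million is the ceiling. For an SME with a turnover of €10 million, the lower amount, €700,000, applies. These figures are ceilings only; the sketch says nothing about the penalty that would be imposed in a given case.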

Competent authorities and Single Point of Contact (SPoC)

The Irish Government has decided that Ireland will adopt a distributed model for implementing the EU AI Act, building on the capacity and expertise of established sectoral regulators.

A new AI Office of Ireland will be established by August 2026 as the central coordinating authority for the implementation of the AI Act in Ireland. It will provide a focal point for the promotion and adoption of transparent and safe AI in Ireland, to ensure that we fully capture the strategic opportunity that AI presents.

As the central coordinating authority for the AI Act, the AI Office will:

- co-ordinate Competent Authority activities to ensure consistent implementation of the EU AI Act

- serve as the single point of contact for the EU AI Act

- facilitate centralised access to technical expertise by the other competent authorities, as required

- drive AI innovation and adoption through the hosting of a regulatory sandbox and act as a focal point for AI in Ireland, encompassing regulation, innovation and deployment

The Competent Authorities currently designated under the EU AI Act are:

- Central Bank of Ireland

- Coimisiún na Meán

- Commission for Communications Regulation

- Commission for Railway Regulation

- Commission for Regulation of Utilities

- Competition and Consumer Protection Commission

- Data Protection Commission

- Health and Safety Authority

- Health Products Regulatory Authority

- Health Service Executive

- Marine Survey Office of the Department of Transport

- Minister for Enterprise, Tourism and Employment

- Minister for Transport

- National Transport Authority

- Workplace Relations Commission

Public authorities which supervise or enforce obligations protecting fundamental rights

Article 77 of the AI Act requires that Member States of the EU identify national public authorities which supervise or enforce the respect of obligations under Union law protecting fundamental rights, including the right to non-discrimination, in relation to certain high-risk uses of AI systems specified in the Act. Under the Act, fundamental rights are those enshrined in the EU Charter of Fundamental Rights, including democracy, the rule of law and environmental protection.

Ireland’s list of fundamental rights authorities identified in accordance with Article 77 of the AI Act is as follows:

These authorities are not Competent Authorities for the AI Act, nor are any obligations, responsibilities or tasks assigned to them under the AI Act.

Rather, these authorities will receive additional powers to help them carry out their current mandates in circumstances involving the use of AI systems. For example, the authorities will have the power to access documentation that developers and deployers of AI systems are required to hold under the AI Act. These powers will apply from 2 August 2026.

EU Single Information Platform - AI Act Service Desk

The European Commission’s Single Information Platform provides online interactive tools to help stakeholders determine whether they are subject to legal obligations and understand the steps they need to take to comply.

The platform is part of the EU AI Act Service Desk, launched on 8 October 2025 as a central initiative to help stakeholders navigate the AI Act requirements. It serves as an accessible, up-to-date information hub offering clear guidance on the AI Act’s application.

The AI Act Compliance Checker is a tool crafted to clarify the obligations and requirements of the AI Act.

EU Digital Omnibus

The EU Digital Omnibus (19 November 2025) proposes targeted amendments across the EU's data protection, data sharing, cybersecurity and artificial intelligence legislative frameworks to reduce duplicative obligations and clarify how the rules interact. The proposed changes aim to reduce compliance costs for businesses throughout the EU, support competitiveness and drive innovation.

It is a first step towards optimising the application of the digital rulebook.

Further details on the Digital Omnibus can be found on Digital Package - Shaping Europe’s digital future.

Further information

AI Act - European Commission

AI Act Q&As - European Commission

Full text of the AI Act: Regulation (EU) 2024/1689

European AI Office - European Commission

AI literacy

Introduction to AI Course - increase your awareness of AI, learn about the EU AI Act and support adoption of AI in your organisation:

Skills and training - AI for You - CeADAR

Single point of contact

Further guidance and clarification are expected from the European Commission over the coming months. Matters not addressed in the information above can be directed to AIinfo@enterprise.gov.ie.

These pages are provided for information purposes only, and do not constitute a legal interpretation of the EU AI Act. Parties should consult the Act directly, and when necessary, obtain professional advice.